The world, how was it generated?

Everybody has an opinion about AI. It’s going to steal our jobs, but also let us all work three hours a day and spend the rest of the time painting and writing poetry. It’s going to destroy the economy, but at the same time make everybody richer and healthier.

The fact that very smart people fundamentally disagree about these matters illustrates how difficult they are to predict. Regardless of this, AI research is going on in earnest. Researchers the world over are thinking deeply about how machines can learn to excel at complicated tasks.

With such deep introspection into intelligence comes insight about how our understanding of the world is built. This insight, which I hope to share here, is relevant to both art and science. These are both areas where we ask the question: how was the world generated?

What is AI?

Artificial intelligence research, broadly speaking, seeks to build computers that are smart like people. What makes people smart, then?

Well, for one, if I gave you a photograph, you could easily tell me what was taking place in it. This is a typical recognition problem, since you can “recognise” what’s going on in the image. We would like computers to be able to do this recognition too, and in fact this is half of the problem of AI.

The other half? Generation. This is the opposite of recognition: if I gave you a short sentence, you could draw (or “generate”) a picture of what I had described.

Images generated by a neural network. From a paper by Anh Nguyen et al.

Recognition problems involve distilling a complicated piece of data down to a key insight. Generation problems involve scaling a simple piece of information up to something more complicated. Note that the distinction can be subjective: to me, translating Japanese text into English is a recognition problem, whereas my Japanese friend might see it as one of generation.

To give you a sense of what’s possible right now, the above images of volcanoes are completely synthetic and were generated by a neural network. A different kind of neural network would have no problem recognising these images as volcanoes or mountains.

Recognition-generation loops

So AI problems can be split into those of recognition and those of generation. Is this a complete description? Or is there any aspect of intelligence that it misses?

Let’s take me as an example. If I’m feeling cynical, I can characterise myself as a black box that takes input and converts it into output. My input is stimuli from the outside world, such as sight and sound, and my output is a response to those stimuli, which is hopefully beneficial to my survival. Let’s rephrase this in terms of our formalism: I recognise external stimuli and generate my response.

By thinking in these terms, you can convince yourself that everything that a human being does boils down to either recognition or generation. How does this picture fit with classic measures of intelligence such as strength of memory, learning speed or ability to predict the future? These can be seen as qualities which improve an agent’s generative and recognition abilities. For example, if I can remember everything that happened to me in the past, I may be able to use that information to generate better plans in the future.

This characterisation of intelligence is suggestive of a path towards building general artificial intelligence: we should build systems with ever better recognition and generative abilities. One day we can perhaps stitch together these recognition and generative abilities into a loop, and have something that resembles our own intelligence.

The intelligent artist

When Feynman died, scrawled across the top of his blackboard at Caltech were the words,

"What I cannot create, I do not understand."

People working in AI and machine learning just LOVE this quote. When I first heard it, I associated it with theoretical physics. If I could not construct a theory myself from first principles, did I really understand it? If all I did was read about a theory in a book, it was easy to trick myself into thinking that I’d understood it. Really I was just learning to recognise the theory. The acid test of understanding was whether a week later I could reconstruct the theory without looking at notes: whether I could generate it.

Recognising and generating a theory are different in some fundamental way. Being good at one does not automatically make you good at the other. The same gap shows up far beyond physics, and art provides a beautiful venue to illustrate it.

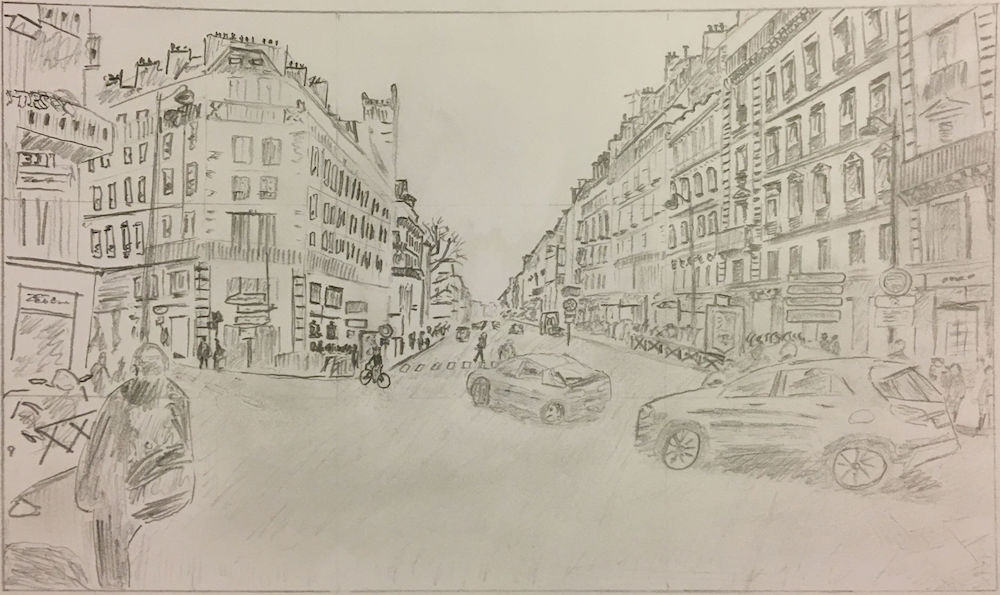

Here’s a photo looking down a street in Paris. It’s easy to see what’s going on in the photo. There’s a man in the foreground, a couple of cars, and the street extends off into the distance.

But now what if I told you, “Draw a picture of a man and a couple of cars in a Parisian street extending off into the distance.” Could you do it? In particular, could you make the perspective look right? Could you make it look like Paris?

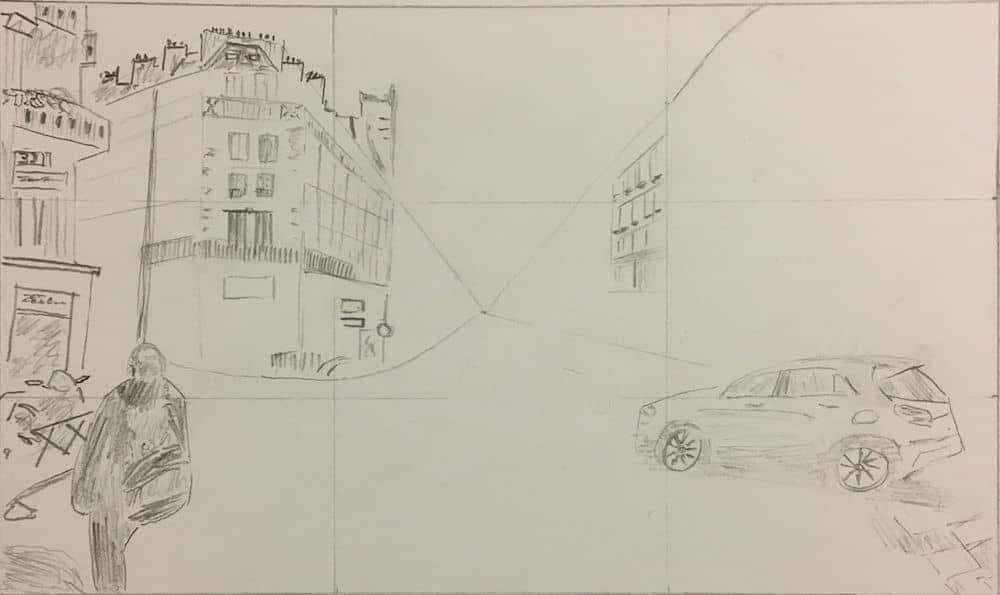

It’s hard. But what I want to persuade you of in the remainder of the post is that learning to generate something, like a drawing, is extremely worthwhile. The artist gains an understanding of aspects of the visual world that otherwise might go unnoticed. To begin, here’s my attempt at drawing the Paris picture:

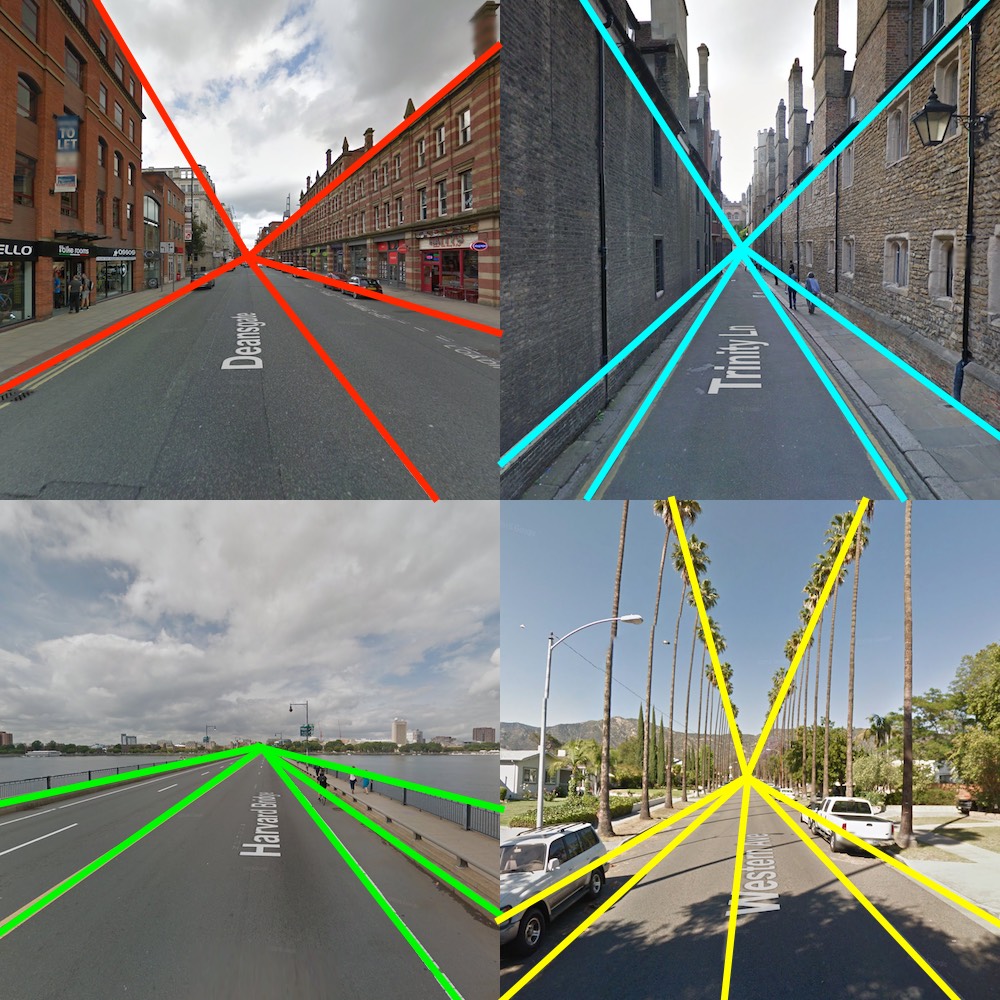

Notice how the tops of the houses and the sides of the road meet at a point in the middle of the image. This is true in general: in any photograph, lines that are parallel in the real 3D world meet at a single point in the image (unless they are also parallel to the image plane, in which case they stay parallel). Here are some more examples, with construction lines.
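The geometry behind this can be checked with a pinhole camera model, a standard idealisation we assume here: a point (X, Y, Z) in front of the camera lands at (fX/Z, fY/Z) on an image plane at distance f. The minimal sketch below projects two parallel lines and checks that their images meet at (f·dx/dz, f·dy/dz), a point fixed only by the lines' shared direction (dx, dy, dz). The function names and the example coordinates are our own, chosen purely for illustration.

```python
from fractions import Fraction

def project(point, f=1):
    """Pinhole projection of a camera-space point (X, Y, Z), with Z > 0,
    onto an image plane at distance f in front of the camera."""
    x, y, z = point
    return (Fraction(f * x, z), Fraction(f * y, z))

def vanishing_point(direction, f=1):
    """Image point where all lines with 3D direction (dx, dy, dz) converge.
    Returns None when dz == 0: such lines are parallel to the image plane
    and stay parallel in the picture."""
    dx, dy, dz = direction
    if dz == 0:
        return None
    return (Fraction(f * dx, dz), Fraction(f * dy, dz))

def intersect(p1, p2, q1, q2):
    """Intersection of the image line through p1, p2 with the one through q1, q2."""
    (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p1, p2, q1, q2
    den = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if den == 0:
        return None  # the two image lines are parallel
    a = x1 * y2 - y1 * x2
    b = x3 * y4 - y3 * x4
    return ((a * (x3 - x4) - (x1 - x2) * b) / den,
            (a * (y3 - y4) - (y1 - y2) * b) / den)

def along(p, d, t):
    """The 3D point p + t * d."""
    return tuple(pi + t * di for pi, di in zip(p, d))

# Two parallel kerbs below the camera (Y = -1) sharing the direction d.
d = (1, 0, 2)
left_start, right_start = (-2, -1, 4), (2, -1, 4)

# Project two points from each kerb and extend them as lines in the image.
meet = intersect(project(left_start), project(along(left_start, d, 1)),
                 project(right_start), project(along(right_start, d, 1)))
vp = vanishing_point(d)
print(meet, vp)
```

Both come out as (1/2, 0): the images of the two kerbs meet exactly at the vanishing point, which depends only on the shared direction d and not on where each kerb starts. For a street running straight away from the camera, d = (0, 0, 1) and the vanishing point is (0, 0), the centre of the image, as in the Paris photo. Exact `Fraction` arithmetic keeps the comparison free of floating-point noise.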


This geometrical fact is suggestive of a way to draw a city street: start by drawing a cross, then fill in the road in the bottom section, and the houses on the left and right. Indeed, this is how I drew the picture of Paris.
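The recipe in the previous paragraph is itself a tiny generative model, and it can be written as a program. Below is a minimal sketch under our own assumptions: we read the “cross” as the four lines running from the image corners to the vanishing point, which split the picture into a bottom section (road), left and right sections (houses) and a top section (sky). That is one natural reading, not a record of exactly how the drawing was made, and `street_sketch` and `lerp` are hypothetical helper names.

```python
from fractions import Fraction

def lerp(p, q, t):
    """Point a fraction t of the way from p to q."""
    return tuple(pi + t * (qi - pi) for pi, qi in zip(p, q))

def street_sketch(width, height, vp, n_houses=3):
    """Toy one-point-perspective 'program' for a street scene (hypothetical).

    Coordinates have the origin at the top-left with y pointing down.
    Returns the cross lines, the sections they create, and vertical house edges.
    """
    corners = {"top_left": (0, 0), "top_right": (width, 0),
               "bottom_left": (0, height), "bottom_right": (width, height)}
    # Step 1: the cross, read here as the four lines from the corners to vp.
    cross = [(corner, vp) for corner in corners.values()]
    # Step 2: the triangular sections the cross creates.
    regions = {
        "road": (corners["bottom_left"], corners["bottom_right"], vp),
        "houses_left": (corners["top_left"], corners["bottom_left"], vp),
        "houses_right": (corners["top_right"], corners["bottom_right"], vp),
        "sky": (corners["top_left"], corners["top_right"], vp),  # the remaining top section
    }
    # Step 3: vertical edges between houses, running from roofline to road edge.
    # For simplicity they are evenly spaced along the construction lines;
    # real houses of equal width would bunch up towards vp.
    edges = []
    for i in range(1, n_houses + 1):
        t = Fraction(i, n_houses + 1)
        for top, bottom in (("top_left", "bottom_left"), ("top_right", "bottom_right")):
            edges.append((lerp(corners[top], vp, t), lerp(corners[bottom], vp, t)))
    return cross, regions, edges

cross, regions, edges = street_sketch(800, 600, (400, 300))
for top, bottom in edges:
    print(top, bottom)
```

Because each edge joins two construction lines at the same fraction of the way to the vanishing point, it comes out exactly vertical, and edges closer to the vanishing point come out shorter, which is the perspective shrinking we see in the photo.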


So what have we learnt? The artist would say that we have learnt how to draw in perspective – we have learnt how parallel lines meet at the vanishing point. The mathematician would say we have learnt about projective geometry, and how parallel lines can meet at the point at infinity. What would the AI researcher say? They would say that by learning a generative model of images (i.e. learning to draw) we have gained an insight into art and mathematics that could easily have been missed had we only ever learnt to recognise what we see, but not generate it.
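The mathematician's “point at infinity” can be made concrete with homogeneous coordinates, the standard coordinates of the projective plane: a point (x, y) becomes (x, y, 1), and any (x, y, w) with w ≠ 0 stands for (x/w, y/w). Both the line through two points and the intersection of two lines are cross products. The short sketch below, with helper names of our own, shows that two parallel lines still meet, at a point whose last coordinate w is 0.

```python
def cross_product(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def line_through(p, q):
    """Homogeneous line through two ordinary points, lifted to (x, y, 1)."""
    return cross_product((*p, 1), (*q, 1))

def meet(l1, l2):
    """Homogeneous intersection of two lines; w == 0 marks a point at infinity."""
    return cross_product(l1, l2)

diagonal = line_through((0, 0), (1, 1))  # y = x
shifted = line_through((0, 1), (1, 2))   # y = x + 1, parallel to the diagonal
anti = line_through((0, 2), (2, 0))      # x + y = 2, not parallel

parallel_point = meet(diagonal, shifted)
crossing_point = meet(diagonal, anti)
print(parallel_point, crossing_point)
```

The crossing lines meet at (-4, -4, -4), which is the ordinary point (1, 1) after dividing by w. The parallel lines meet at (-1, -1, 0): w = 0 marks a point at infinity, lying in the direction (1, 1) that both lines share. A perspective drawing brings that point in from infinity to a finite place on the page, the vanishing point.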

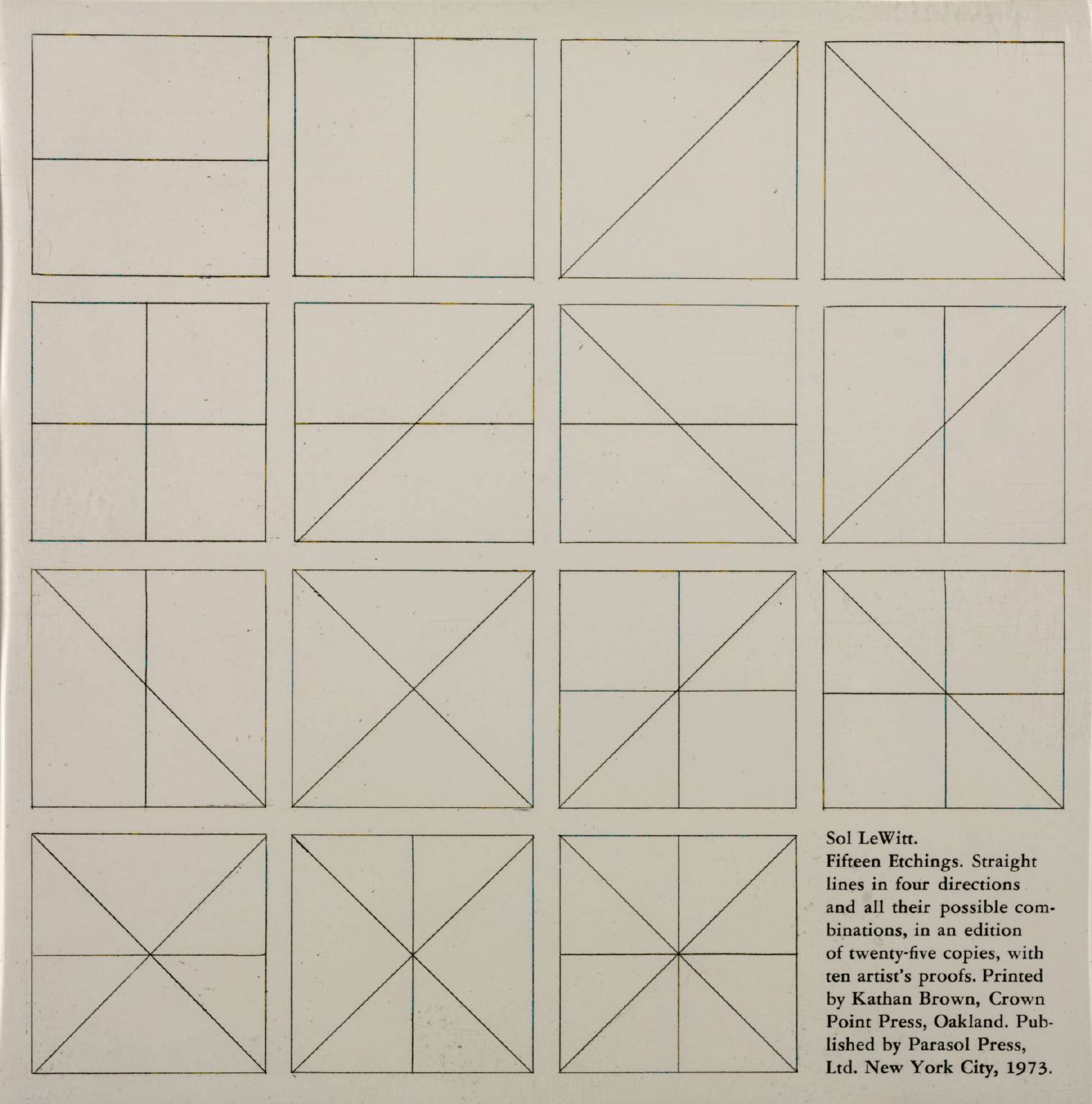

This importance of generative models in art surfaced very clearly in the conceptual art movement of the 1960s and 1970s. According to Sol LeWitt:

"In conceptual art the idea or concept is the most important aspect of the work. When an artist uses a conceptual form of art, it means that all of the planning and decisions are made beforehand and the execution is a perfunctory affair. The idea becomes a machine that makes the art."

LeWitt would specify a drawing as a set of written guidelines and leave its execution to a draftsman. As he put it:

"Each person draws a line differently and each person understands words differently."

This was all part of the art – when LeWitt wrote down his guidelines, he would have to consider the recognition and generative abilities of the draftsman reading them. As an example, one set of guidelines was “straight lines in four directions and all their possible combinations”. And below is an implementation of these guidelines. See if you can spot our generative model for Parisian streets included as a special case.

© The estate of Sol LeWitt
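LeWitt's line “the idea becomes a machine that makes the art” can be taken almost literally. The sketch below expands the guideline “straight lines in four directions and all their possible combinations” into a list of what to draw, under two assumptions of ours: that the four directions are vertical, horizontal and the two diagonals, and that “all their possible combinations” means every non-empty subset. The function name is hypothetical.

```python
from itertools import combinations

# Our assumed reading of the four directions; the guideline itself does not list them.
DIRECTIONS = ("vertical", "horizontal", "diagonal left", "diagonal right")

def all_possible_combinations(items):
    """Every non-empty subset of items, ordered by size and then by position."""
    return [combo
            for size in range(1, len(items) + 1)
            for combo in combinations(items, size)]

panels = all_possible_combinations(DIRECTIONS)
for number, combo in enumerate(panels, start=1):
    print(number, " + ".join(combo))
```

Under this reading the guideline yields 2^4 - 1 = 15 combinations: each direction is either in or out, minus the empty case. Turning that list into lines on a wall is left to the draftsman, which is exactly where LeWitt's remark about each person drawing a line differently comes in.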

Afterthoughts

In this post I wanted to introduce recognition and generative models, and show how they are intimately linked to intelligence. I wanted to hint at the power of learning generative models.

I chose the example of learning to draw perspective because of its beautiful links to art and mathematics. Indeed, perspective in art is often used as motivation for moving beyond Euclidean geometry to study more exotic geometries. Such geometrical structures underlie some of our most profound theories in physics: general relativity and quantum field theory. In one light, these successes of theoretical physics can be viewed as an effort to learn a generative model for the universe.

I hope to convey that there is more to machine learning than a hunt for big bucks and robots that do cool things. In fact, the subject holds a beauty that goes to the very core of why we do science and how we create art. From string theory to Sol LeWitt, we ask: “the world, how was it generated?”