Objective

I aim to develop the mathematical foundations of artificial intelligence, with an emphasis on neural networks. This work will enable the principled design of the next generation of more efficient and reliable learning systems.

My research seeks first-principles solutions to questions that lie at the heart of deep-learning-based artificial intelligence. For instance:

- How should a neural network be trained?

- How does a neural network make predictions about unseen data?

Satisfying answers to these questions call for fundamentally new mathematical models, although these models will draw on established tools from numerical analysis, functional analysis, statistics and geometry.

The applications of this research include learning algorithms that are more automatic and require less manual tuning, and more principled approaches to uncertainty quantification in deep networks.

Focus areas

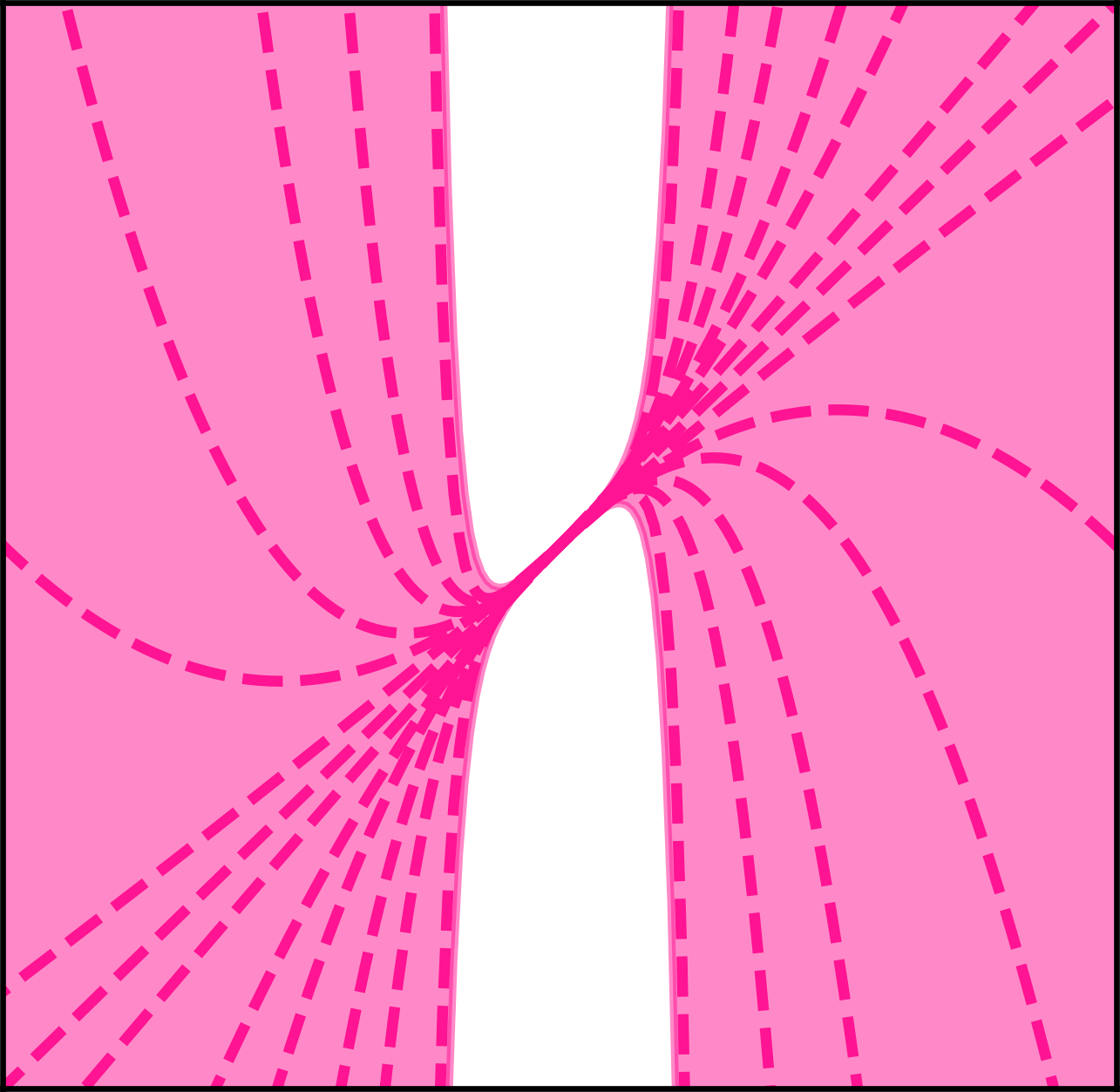

1 Neural Architecture Aware Optimisation

Today's popular deep learning optimisers neglect the operator structure of neural networks. As a result, they require extensive manual tuning on every new task. We proposed, and continue to develop, a framework for designing architecture-dependent learning algorithms. For instance, the framework allows you to formally derive how the learning rate should scale with network depth. The goal is to make costly hyperparameter tuning a thing of the past.
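The depth dependence can be motivated with a toy calculation. Suppose, as a simplifying assumption, that a relative change of size η in each of L layers compounds multiplicatively, so the network's overall relative change is roughly (1 + η)^L - 1. Keeping that quantity bounded as L grows suggests a per-layer relative step of order 1/L, since (1 + η/L)^L - 1 stays below e^η - 1. The Python sketch below illustrates this with a hypothetical layer-wise relative update (`relative_update`, `frobenius` and `compounded_change` are illustrative names). It is not the framework's actual update rule, only an instance of the scaling argument.

```python
import math
from typing import List

Matrix = List[List[float]]


def frobenius(m: Matrix) -> float:
    """Frobenius norm of a matrix stored as a list of rows."""
    return math.sqrt(sum(x * x for row in m for x in row))


def relative_update(weights: List[Matrix], grads: List[Matrix],
                    lr: float) -> List[Matrix]:
    """Hypothetical layer-wise relative update (illustration only).

    Each layer moves against its gradient by a step whose Frobenius norm is
    (lr / depth) times the layer's own norm, where depth = number of layers.
    """
    depth = len(weights)
    step = lr / depth  # assumed 1/depth scaling of the per-layer relative step
    updated = []
    for w, g in zip(weights, grads):
        g_norm = frobenius(g)
        if g_norm == 0.0:
            # No gradient signal: leave this layer unchanged.
            updated.append([row[:] for row in w])
            continue
        # Rescale the gradient so the update has norm step * ||w||.
        scale = step * frobenius(w) / g_norm
        updated.append([[wij - scale * gij for wij, gij in zip(w_row, g_row)]
                        for w_row, g_row in zip(w, g)])
    return updated


def compounded_change(per_layer: float, depth: int) -> float:
    """Toy model: relative changes of size per_layer compound over depth layers."""
    return (1.0 + per_layer) ** depth - 1.0
```

With lr = 0.1, `compounded_change(lr, depth)` grows like 1.1^depth, whereas `compounded_change(lr / depth, depth)` stays below e^0.1 - 1 ≈ 0.105 for every depth.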

Euclidean trust

Without consensus on what mechanism drives generalisation in deep learning, it is difficult to design principled methods for uncertainty quantification. To address this problem, we created a technique called normalised margin control to probe the strengths and weaknesses of different learning theories. These results led us to a new theoretical connection between max-margin neural nets and Bayes point machines. Next up, I am excited to apply normalised margin control to develop more principled methods for uncertainty quantification in deep networks.
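For intuition about why the margin must be normalised: the margin of a classifier on a labelled point (x, y) with y ∈ {-1, +1} is y·f(x), and for a linear model f(x) = ⟨w, x⟩ it can be inflated arbitrarily by rescaling w, without changing a single prediction. Dividing by ‖w‖ removes this degree of freedom. The sketch below assumes a linear classifier and the Euclidean norm; the definition used for deep networks in the ICML '22 paper may differ, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Euclidean inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def raw_margin(w: Sequence[float], xs: List[Sequence[float]],
               ys: List[int]) -> float:
    """Smallest unnormalised margin min_i y_i <w, x_i> (labels are +1 or -1)."""
    return min(y * dot(w, x) for x, y in zip(xs, ys))


def normalised_margin(w: Sequence[float], xs: List[Sequence[float]],
                      ys: List[int]) -> float:
    """Smallest margin divided by ||w||, so it is invariant to rescaling w."""
    w_norm = math.sqrt(dot(w, w))
    if w_norm == 0.0:
        raise ValueError("normalised margin is undefined for w = 0")
    return raw_margin(w, xs, ys) / w_norm
```

For example, with w = (3, 4), points (1, 0) and (0, -1) and labels +1 and -1, the raw margin is 3 and the normalised margin is 0.6. Multiplying w by 10 raises the raw margin to 30, but the normalised margin stays at 0.6.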

3 Resource Constrained Learning & Neuroscience

It is amazing what the human brain can accomplish running on 20 watts, compared with the power drawn by an entire Google data centre. My research looks at how learning algorithms can be made more time- and memory-efficient. For instance, we showed that learning can still provably converge using only 1-bit (sign) gradients. Furthermore, learning can make efficient use of weights stored in a logarithmic number system, mirroring evidence from neuroscience. My ultimate goal is to reduce the carbon footprint of artificial intelligence workloads.
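To make the two ideas concrete, here is a minimal Python sketch of sign-based descent, where each gradient coordinate is compressed to a single bit (a 32× saving over 32-bit floats), and of rounding a weight onto a logarithmic grid. The tie-break sign(0) = +1, the base-2 grid and all function names are assumptions made for illustration, not the exact schemes from the signSGD or multiplicative-weights papers.

```python
import math
from typing import List


def sign_compress(grad: List[float]) -> List[int]:
    """Compress each gradient coordinate to one bit: +1 or -1.

    Zero is mapped to +1 (an assumed tie-break) so every entry fits in one bit.
    """
    return [1 if g >= 0.0 else -1 for g in grad]


def sign_sgd_step(weights: List[float], grad: List[float],
                  lr: float) -> List[float]:
    """One step of sign-based gradient descent: w <- w - lr * sign(g)."""
    return [w - lr * s for w, s in zip(weights, sign_compress(grad))]


def log_quantise(x: float) -> float:
    """Round |x| to the nearest power of two in log space, keeping the sign.

    The base-2 grid is an illustrative assumption; zero is kept as zero.
    """
    if x == 0.0:
        return 0.0
    exponent = round(math.log2(abs(x)))
    return math.copysign(2.0 ** exponent, x)
```

Only the signs need to be stored or communicated per step, and a log-quantised weight can be stored as a sign plus a small integer exponent.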

Selected publications

Automatic gradient descent: deep learning without hyperparameters. Jeremy Bernstein*, Chris Mingard*, Kevin Huang, Navid Azizan & Yisong Yue. Preprint.

@article{agd-2023, author = {Jeremy Bernstein and Chris Mingard and Kevin Huang and Navid Azizan and Yisong Yue}, title = {{A}utomatic {G}radient {D}escent: {D}eep {L}earning without {H}yperparameters}, journal = {arXiv:2304.05187}, year = 2023}

Optimisation & generalisation in networks of neurons. Jeremy Bernstein. PhD thesis.

@phdthesis{bernstein-thesis, author = {Jeremy Bernstein}, title = {Optimisation \& Generalisation in Networks of Neurons}, school = {California Institute of Technology}, year = 2022, type = {{Ph.D.} thesis}}

Kernel interpolation as a Bayes point machine. Jeremy Bernstein, Alexander R. Farhang & Yisong Yue. Preprint.

@article{bpm, title={Kernel interpolation as a {B}ayes point machine}, author={Jeremy Bernstein and Alexander R. Farhang and Yisong Yue}, journal={arXiv:2110.04274}, year={2022}}

Investigating generalization by controlling normalized margin. Alexander R. Farhang, Jeremy Bernstein, Kushal Tirumala, Yang Liu & Yisong Yue. ICML '22.

@inproceedings{margin, title = {Investigating generalization by controlling normalized margin}, author = {Alexander R. Farhang and Jeremy Bernstein and Kushal Tirumala and Yang Liu and Yisong Yue}, booktitle = {International Conference on Machine Learning}, year = {2022}}

Fine-grained system identification of nonlinear neural circuits. Dawna Bagherian, James Gornet, Jeremy Bernstein, Yu-Li Ni, Yisong Yue & Markus Meister. KDD '21.

@inproceedings{sysid, title = {Fine-grained system identification of nonlinear neural circuits}, author = {Dawna Bagherian and James Gornet and Jeremy Bernstein and Yu-Li Ni and Yisong Yue and Markus Meister}, booktitle = {International Conference on Knowledge Discovery and Data Mining}, year = {2021}}

Computing the information content of trained neural networks. Jeremy Bernstein & Yisong Yue. TOPML '21 contributed talk.

@inproceedings{entropix, title={Computing the information content of trained neural networks}, author={Jeremy Bernstein and Yisong Yue}, booktitle={Workshop on the Theory of Overparameterized Machine Learning}, year={2021}}

Learning by turning: neural architecture aware optimisation. Yang Liu*, Jeremy Bernstein*, Markus Meister & Yisong Yue. ICML '21.

@inproceedings{nero, title = {Learning by turning: neural architecture aware optimisation}, author = {Yang Liu and Jeremy Bernstein and Markus Meister and Yisong Yue}, booktitle = {International Conference on Machine Learning}, year = {2021}}

Learning compositional functions via multiplicative weight updates. Jeremy Bernstein, Jiawei Zhao, Markus Meister, Ming-Yu Liu, Anima Anandkumar & Yisong Yue. NeurIPS '20.

@inproceedings{madam, title={Learning compositional functions via multiplicative weight updates}, author={Jeremy Bernstein and Jiawei Zhao and Markus Meister and Ming-Yu Liu and Anima Anandkumar and Yisong Yue}, booktitle = {Neural Information Processing Systems}, year={2020}}

On the distance between two neural networks and the stability of learning. Jeremy Bernstein, Arash Vahdat, Yisong Yue & Ming-Yu Liu. NeurIPS '20.

@inproceedings{fromage, title={On the distance between two neural networks and the stability of learning}, author={Jeremy Bernstein and Arash Vahdat and Yisong Yue and Ming-Yu Liu}, booktitle = {Neural Information Processing Systems}, year={2020}}

signSGD: compressed optimisation for non-convex problems. Jeremy Bernstein, Yu-Xiang Wang, Kamyar Azizzadenesheli & Anima Anandkumar. ICML '18 long talk.

@inproceedings{signum, title = {sign{SGD}: compressed optimisation for non-convex problems}, author = {Bernstein, Jeremy and Wang, Yu-Xiang and Azizzadenesheli, Kamyar and Anandkumar, Animashree}, booktitle = {International Conference on Machine Learning}, year = {2018}}